I think one of the main keywords of 2023 so far has been “ChatGPT.” Of course, the translation industry has been far from immune to its impact.

So: let’s first think about this notion of “translation.” In my case, I work as a translator. This means that, every week, I spend many hours working through Japanese texts, researching the nuances of words and technical terms, drafting translations in English, and then polishing the translation so that it reads like a text that was originally created in English, with appropriate terminology and expressions, following all English style conventions and formatting.

Completing a translation is a multi-stage process that involves many kinds of technology and, more recently, AI. These days, I generally work on translations using computer-assisted translation (CAT) software, but there is definitely a growing trend within the industry to incorporate some form of machine translation (MT) into the process to improve efficiency.

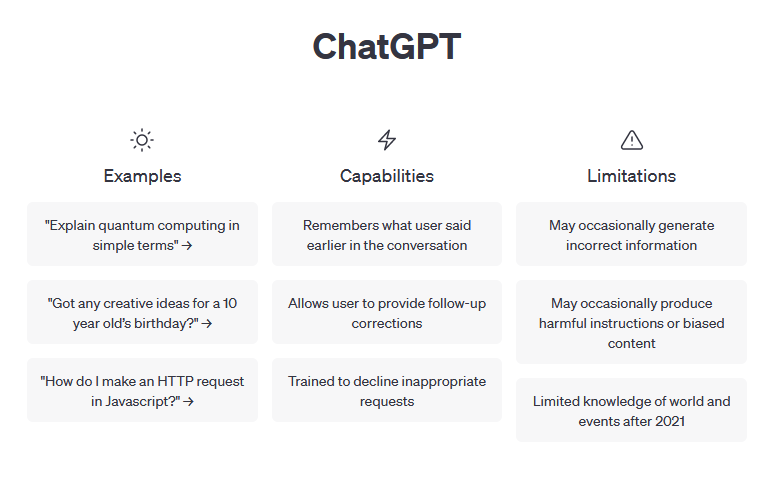

Anyway, ChatGPT is something that emerged at the beginning of the year. In my circle of translators, there was an initial excited buzz of “how can we utilize this technology to make our translation work even more efficient?” People were sharing different ways it could be used: how they consulted ChatGPT to rephrase specific expressions for more natural options, how they would ask ChatGPT for the meaning of acronyms that they couldn’t figure out by themselves, how they would have ChatGPT “rewrite” a target translation for a specific context (e.g., as part of an instruction manual, or for a contract). I followed all of these suggestions with deep interest, and initially tried implementing some of them in my own translation process, with varying degrees of success.

For about a week, I kept a ChatGPT tab open while I worked. Whenever I came across something that I needed help with, I would ask ChatGPT.

Unfortunately, the outcome was not great.

I found that, more often than not, I would be able to find the answer with more confidence by using a search engine like Google (the conventional process of using keywords to find the answer).

The reason for this is simple: translation is a process built on precision and accuracy. Everything translated – every term, every acronym, every set phrase – is selected for a reason. You can’t just translate a phrase arbitrarily without contemplating the background, or the context, of the text. In most cases, there is a set term, or a set phrase, used to express a specific context. This consistency is necessary for all industries to operate seamlessly across the world.

So when ChatGPT starts giving me answers, I can’t help but doubt whether they’re correct in my case, and whether they’re really the answers that I’m looking for. ChatGPT doesn’t give sources, which is another major issue for me. There’s no transparency about where the information is coming from. Everything you do in translation needs to be justified – why did I choose that word in that context? Using Google and searching with keywords enables me to search with confidence – I know that this source is trustworthy; I know that this is a reliable resource. For me, using ChatGPT as a resource is on par with citing a rumor as an information source in an academic paper. “My friend said so” doesn’t exactly cut it, right?

I think that, ultimately, the value of ChatGPT lies in how you use it. Some friends gush about ChatGPT because it helps them with coding (apparently ChatGPT is great for coding!) and other types of logical problem-solving. It also helps others write business emails or reports. For language learning, and for asking about the nuances of phrases or words, ChatGPT can be priceless. For general templates (creating emails and the like) or getting everyday tasks automated, I think it can be useful.

Unfortunately, I don’t see ChatGPT becoming a part of my work process at the moment. I feel like I receive better, more reliable answers from Google, because I can see where the answers are coming from.

ChatGPT also has a tendency to “hallucinate”: it can make up nonsense when it doesn’t know the answer. So… for me, I won’t be relying on it too much, but I definitely recognize its value as a tool, and won’t rule out using it in future. But for now, I’ll stick with consulting the internet.